Facebook reported that it had disabled 2.2 billion fake accounts, most of them within minutes of being created. It also said it had taken down 1.8 billion pieces of spam, 19.4 million pieces of adult nudity and sexual activity, 33.6 million pieces of violent content, and 6.4 million pieces of terrorist and extremist propaganda. Facebook was quick to point out that its own detection technology flagged more than 90% of these posts. Even so, the social network said that while its technology can handle much of this work, it also relies on its users to help flag and report posts that may be provocative or inciting.

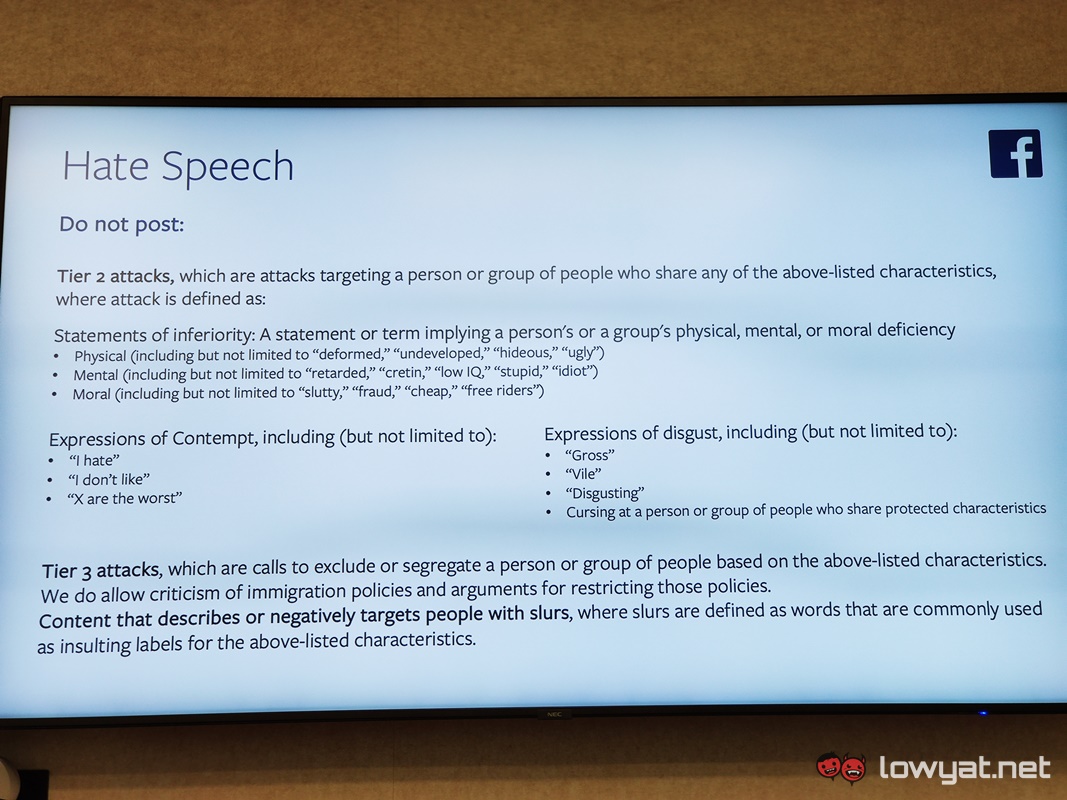

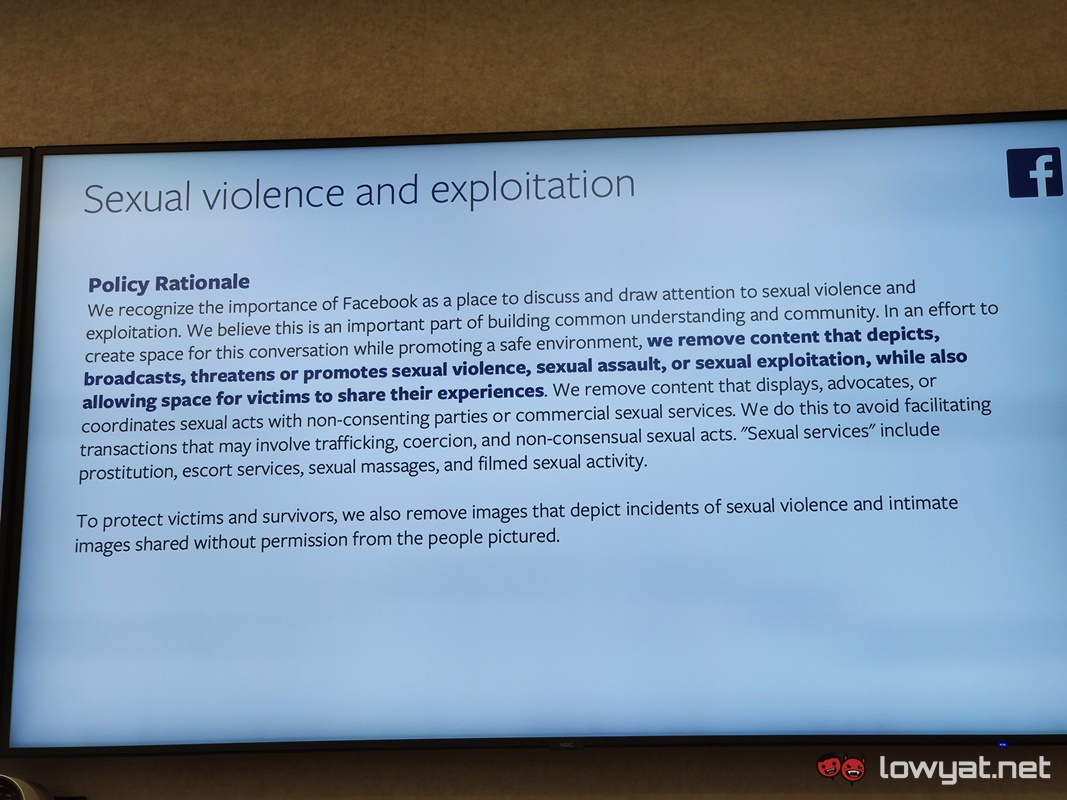

As a brief rundown, Facebook’s Community Standards rest on three core principles that underpin a longer list of rules: Safety, Voice, and Equity. The principles are largely self-explanatory. Safety covers the policies that keep the community safe, Voice refers to the freedom of expression the platform gives its users, and Equity ensures that Facebook’s policies apply to everyone, regardless of race, religion, or culture.

Facebook also explained its Community Standards on hate speech and on posts involving sexual violence or exploitation. Regarding the latter, Facebook says that as long as the content “does not broadcast, threatens or promotes sexual violence, sexual assault, or sexual exploitation”, and allows users who have been victims of such acts to share their experiences, it will not remove these posts.

Of course, the line between acceptable and unacceptable content is often a grey area, and Facebook will not always catch a problem right away. As mentioned earlier, that is also why the platform asks its users to help flag inappropriate posts.